The journey of adopting Large Language Models (LLMs) in the enterprise is an evolution, much like learning to drive a car. Early interactions may feel somewhat manual and require constant input, but as the technology matures, it becomes a powerful, collaborative partner that can handle complex workflows under human supervision. Understanding this evolution is key to building a practical strategy that delivers value at every stage without sacrificing control.

Stage 1: Foundational LLMs (Manual Driving)

For many companies, the first stage of LLM use has been like driving a manual car: you must spell out every instruction. Prompts like “Summarize this” or “Draft an email marketing campaign” are powerful but transactional. The LLM executes a single task and then stops; unless the earlier exchange is supplied again, it has no memory or context to draw on for a follow-up request. This is the baseline, offering immediate productivity boosts for discrete tasks, but the human driver is doing all the steering.

Stage 2: Enhanced LLMs (Driver-Assist Features)

The second stage introduces “driver-assist” features that make the core engine smarter and more reliable. This isn't a single technology but typically a combination of capabilities. For many companies, pairing Chain-of-Thought reasoning with Retrieval-Augmented Generation (RAG) has proven especially powerful.

- Chain-of-Thought (CoT): CoT allows an LLM to "think" step by step. Instead of jumping directly to an answer, the model breaks a complex query into a logical sequence of intermediate reasoning steps, much as a person would work through a problem on paper. This ability to handle intermediate steps is a prerequisite for tackling any workflow that requires more than a single action.

- Retrieval-Augmented Generation (RAG): Nobody wants an AI that sounds confident while delivering fiction. RAG addresses this by grounding the LLM in verifiable facts. When prompted, a RAG-enabled system first retrieves relevant information from a trusted knowledge source, such as a document library or an internal wiki, often indexed in a vector database for semantic search. This retrieved data is then included in the prompt, instructing the model to base its answer on the provided facts. Grounding does not eliminate errors, but it makes answers far more likely to be current and accurate and lets them cite their sources, which is critical for building trust and meeting compliance requirements.
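To make the combination concrete, here is a minimal Python sketch of how a RAG step and a CoT instruction can be assembled into one prompt. It assumes a toy word-overlap retriever standing in for the semantic search a vector database would perform; the `Document` type, the function names, and the sample corpus are all hypothetical. The actual model call is left out because it depends on the provider.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str  # hypothetical identifier, used for citations
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercased word set: a toy stand-in for the embeddings a vector database uses.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    # Score each document by the number of words it shares with the query.
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(d.text)), d) for d in corpus]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:k]]


def build_grounded_prompt(question: str, passages: list[Document]) -> str:
    # RAG: put the retrieved passages into the prompt, labeled for citation.
    sources = "\n".join(f"[{d.doc_id}] {d.text}" for d in passages)
    return (
        "Answer using ONLY the sources below and cite them as [id].\n"
        "If the sources do not contain the answer, say so.\n"
        # CoT: ask for intermediate reasoning steps before the final answer.
        "Reason step by step, then give the final answer on its own line.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Usage with a hypothetical two-document knowledge base.
corpus = [
    Document("refund-policy", "Refunds are issued within 14 days of a return."),
    Document("shipping", "Standard shipping takes 3 to 5 business days."),
]
question = "How long do refunds take?"
prompt = build_grounded_prompt(question, retrieve(question, corpus))
print(prompt)
```

Because the model is told to answer only from the labeled sources and to say when they are silent, each citation in its answer can be checked against the passages that were actually retrieved.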

Stage 3: Multi-Capability Systems (The Professional Crew)

In the third stage of maturity, organizations move from a single, enhanced engine to a collaborative team of specialized capabilities. While some call this a Multi-Agent System (MAS), it’s best understood as a framework for orchestrating multiple tools and functions to achieve a complex goal. The core principle is the division of labor. Instead of one AI trying to do everything, you have a system that can intelligently use a variety of capabilities:

- Specialized Tools: Different components handle different jobs. One capability might call a CRM API to get customer data, another might query a policy database using RAG, and a third might execute a financial calculation.

- Parallel Processing: Independent tasks, such as pulling customer data while searching policy documents, can run simultaneously, significantly speeding up complex processes like onboarding a customer or processing an insurance claim.

- Modularity and Extensibility: New capabilities—whether they are new agents, APIs, or integrations with other software—can be added to the system without having to redesign the entire workflow.

This “digital crew” approach is inherently more powerful and flexible than a single AI, especially for solving the complex, multi-step problems that define modern business operations.
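The division of labor described in this section can be sketched in Python with `asyncio`: a registry turns each capability into a pluggable entry, and `asyncio.gather` runs independent tools concurrently. The tool names, their return values, and the 2% fee are hypothetical placeholders; the `asyncio.sleep` calls only simulate the latency of a real CRM or policy-search call.

```python
import asyncio
from typing import Awaitable, Callable

# A tool takes the shared request and returns fields to merge into the result.
Tool = Callable[[dict], Awaitable[dict]]
TOOLS: dict[str, Tool] = {}


def register(name: str) -> Callable[[Tool], Tool]:
    # Adding a capability is one decorated function, not a workflow redesign.
    def decorator(fn: Tool) -> Tool:
        TOOLS[name] = fn
        return fn
    return decorator


@register("crm_lookup")
async def crm_lookup(request: dict) -> dict:
    await asyncio.sleep(0.1)  # simulates latency of a CRM API call
    return {"customer_id": request["customer_id"], "tier": "gold"}


@register("policy_search")
async def policy_search(request: dict) -> dict:
    await asyncio.sleep(0.1)  # simulates latency of a RAG query over policies
    return {"policy_snippets": ["Gold-tier customers get free returns."]}


@register("fee_calculation")
async def fee_calculation(request: dict) -> dict:
    return {"fee": round(request["amount"] * 0.02, 2)}  # hypothetical 2% fee


async def run_parallel(tool_names: list[str], request: dict) -> dict:
    # Start all independent tools at once and wait for every result.
    results = await asyncio.gather(*(TOOLS[name](request) for name in tool_names))
    merged: dict = {}
    for result in results:
        merged.update(result)
    return merged


request = {"customer_id": "C-001", "amount": 250.0}
result = asyncio.run(run_parallel(["crm_lookup", "policy_search", "fee_calculation"], request))
print(result)
```

Since the two simulated lookups overlap, the batch takes roughly as long as the slowest tool rather than the sum of all of them. Tools that depend on each other's output would instead need to run in sequence.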

Essential Capabilities for Enterprise-Grade Systems

To be truly effective and trustworthy in a business context, these multi-capability systems require a robust set of features that guarantee control and visibility. While several frameworks exist to build these systems, enterprises should look for solutions that provide:

- Structured & Observable Workflows: The ability to define a clear, predictable path for how a task is completed. This often takes the form of a directed graph, where each step (node) and transition (edge) is explicitly defined and logged. This prevents the AI from "wandering" and provides a clear audit trail for every action taken.

- Human-in-the-Loop (HITL) Control: No business will hand over the keys entirely. A critical feature is the ability to insert human approval gates at any crucial decision point. This allows a manager or compliance officer to review outputs, request changes, or approve actions before the workflow proceeds, ensuring humans have the final say. If an error occurs, the system should allow a user to "rewind" to a previous step and retry, rather than starting from scratch.

- Centralized State & Context Management: For a team to work together, it needs a shared understanding of the task. The system must maintain context—like customer details or case history—across multiple steps and sessions, ensuring a coherent and seamless workflow from start to finish.
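A minimal sketch of how these three capabilities fit together, assuming a simple in-process Python engine rather than any particular framework: nodes read and update one shared state dictionary, edges name the next node, every step is written to an audit log, selected nodes wait for a human decision, and a state snapshot is saved before each step so a reviewer can rewind and retry. The `Workflow` class and the sample `draft` and `send` steps are hypothetical.

```python
import copy
from dataclasses import dataclass, field
from typing import Callable, Optional

Step = Callable[[dict], dict]  # a node reads the shared state and returns updates


@dataclass
class Workflow:
    nodes: dict[str, Step]
    edges: dict[str, Optional[str]]  # node -> next node; None ends the run
    approval_gates: set[str] = field(default_factory=set)  # need sign-off before running
    audit_log: list[str] = field(default_factory=list)
    checkpoints: list[tuple[str, dict]] = field(default_factory=list)

    def run(self, start: str, state: dict, approve: Callable[[str, dict], bool]) -> dict:
        node: Optional[str] = start
        while node is not None:
            # Snapshot the shared state before each step so it can be rewound to.
            self.checkpoints.append((node, copy.deepcopy(state)))
            if node in self.approval_gates and not approve(node, state):
                self.audit_log.append(f"{node}: rejected by reviewer, paused")
                state["paused_at"] = node
                return state
            state.update(self.nodes[node](state))
            self.audit_log.append(f"{node}: ok")
            node = self.edges[node]
        return state

    def rewind(self, node: str) -> tuple[str, dict]:
        # Return a copy of the latest state saved just before `node` ran.
        for name, snapshot in reversed(self.checkpoints):
            if name == node:
                return name, copy.deepcopy(snapshot)
        raise KeyError(f"no checkpoint for {node}")


# Usage: a reviewer must approve before a drafted reply is sent.
wf = Workflow(
    nodes={
        "draft": lambda s: {"reply": f"Hello {s['customer']}, your refund is approved."},
        "send": lambda s: {"sent": True},
    },
    edges={"draft": "send", "send": None},
    approval_gates={"send"},
)
paused = wf.run("draft", {"customer": "Ada"}, approve=lambda node, s: False)
step, saved_state = wf.rewind("send")  # back to the state just before "send"
resumed = wf.run(step, saved_state, approve=lambda node, s: True)
print(wf.audit_log)
```

Here each node has a single fixed successor. Production orchestration frameworks typically also support conditional edges that pick the next node from the current state, and they persist checkpoints to durable storage so a workflow can resume across sessions.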

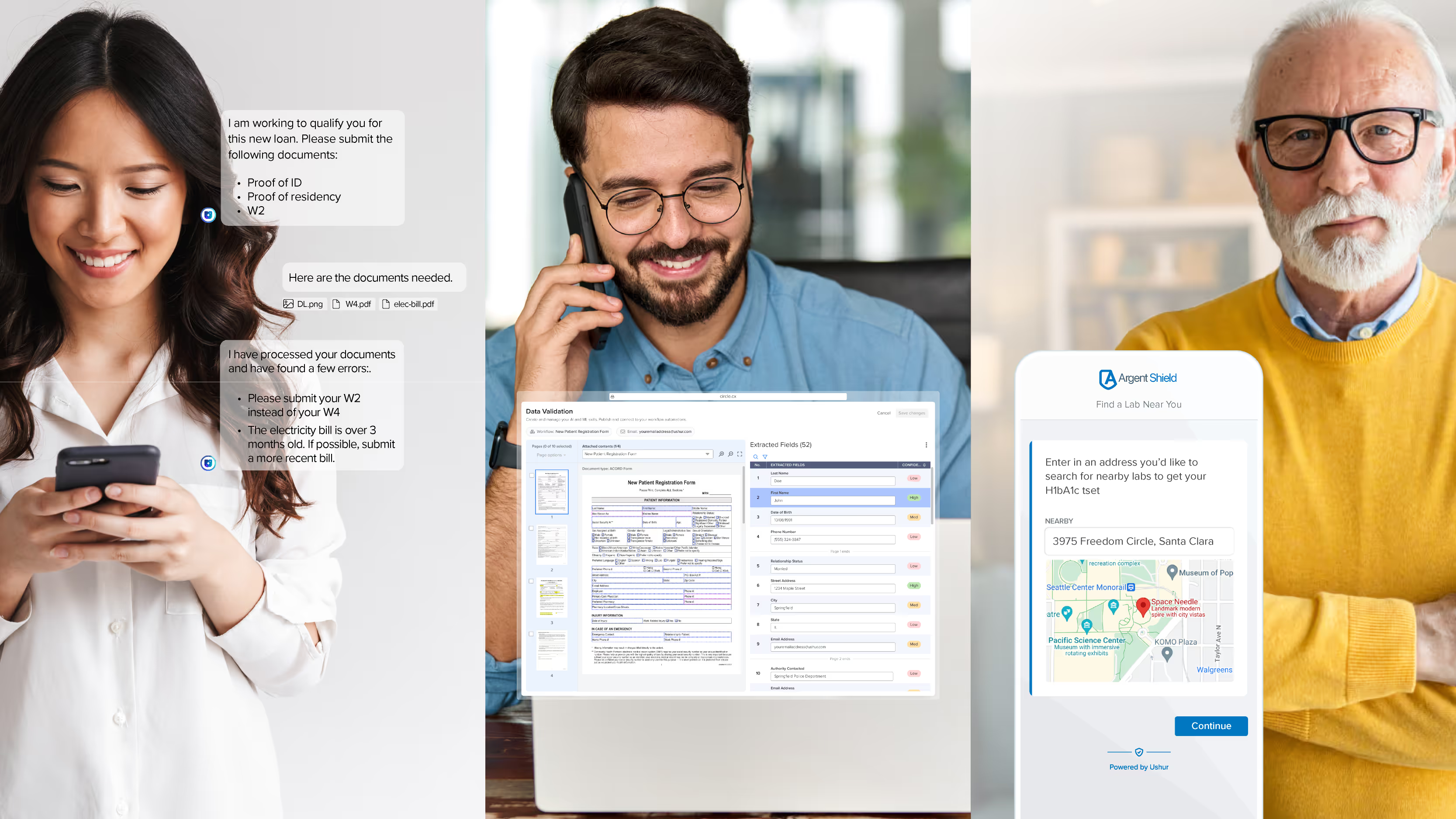

Real-World Examples in Business

When orchestrated correctly, these systems can transform core business processes.

- Customer Experience (CX): A query understanding tool first identifies a customer’s intent. A knowledge retrieval tool then uses RAG to find relevant FAQs or order histories. A response drafting tool writes a personalized reply, and an escalation tool monitors for frustration and hands off to a human agent when needed.

- Insurance Claims Processing: An intake tool collects incident details. A policy retrieval tool searches the customer’s policy documents via RAG. A claims analysis tool checks eligibility rules, and a decision tool recommends an action, quoting the exact policy text for the human adjuster to review and approve.

- Healthcare Plan Member Support: A benefits verification tool instantly checks coverage based on the member’s specific plan. A network directory tool helps locate in-network providers. For more complex cases requiring personalized attention, the system seamlessly escalates to a human care coordinator.
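The insurance example can be sketched as a short pipeline of plain Python functions. This is illustrative only: the in-memory `POLICY_CLAUSES` dictionary stands in for a RAG search over real policy documents, and the policy IDs, clause text, and coverage limit are invented. The output is a recommendation with the status `pending_adjuster_review`, so nothing is paid or denied without a human.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Claim:
    # Output of the intake step: the incident details collected from the customer.
    claim_id: str
    policy_id: str
    incident_type: str
    amount: float


# Hypothetical policy data; a real system would run a RAG search over policy documents.
POLICY_CLAUSES = {
    "P-100": {
        "water_damage": "Section 4.2: Sudden water damage is covered up to $10,000.",
        "flood": "Section 7.1: Flood damage is excluded.",
    },
}
COVERAGE_LIMITS = {("P-100", "water_damage"): 10_000.0}


def retrieve_clause(claim: Claim) -> Optional[str]:
    # Policy retrieval: find the clause that governs this incident type.
    return POLICY_CLAUSES.get(claim.policy_id, {}).get(claim.incident_type)


def check_eligibility(claim: Claim) -> tuple[bool, str]:
    # Claims analysis: apply simple, explicit eligibility rules.
    limit = COVERAGE_LIMITS.get((claim.policy_id, claim.incident_type))
    if limit is None:
        return False, "incident type not covered"
    if claim.amount > limit:
        return False, f"amount exceeds limit of {limit:,.0f}"
    return True, "within coverage"


def recommend(claim: Claim) -> dict:
    # Decision step: recommend an action and quote the policy text it relies on.
    clause = retrieve_clause(claim)
    eligible, reason = check_eligibility(claim)
    return {
        "claim_id": claim.claim_id,
        "recommendation": "approve" if eligible else "deny",
        "reason": reason,
        "quoted_policy_text": clause or "no matching clause found",
        "status": "pending_adjuster_review",  # a human adjuster makes the final call
    }


print(recommend(Claim("CL-1", "P-100", "water_damage", 2_500.0)))
print(recommend(Claim("CL-2", "P-100", "flood", 2_500.0)))
```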

A Practical Roadmap for Adoption

The journey to leveraging multi-capability AI is a marathon, not a sprint. A realistic adoption strategy focuses on solving tangible problems and building trust over time.

- Phase 1: Start with a Pressing Business Problem. Instead of searching for a "multi-agent solution," identify a high-impact business process that is currently manual, slow, or error-prone. Look for a technology partner whose platform can solve this specific problem while offering three crucial things: visibility into how it works, control over its decisions, and the extensibility to connect with more of your systems over time.

- Phase 2: Expand and Integrate Capabilities. Once the initial solution has proven its value, begin expanding its capabilities. Integrate it with more data sources (like databases and APIs) and add new skills. This is where the power of RAG and CoT can be scaled to make the system smarter and more reliable within its defined domain.

- Phase 3: Introduce Collaborative Workflows. With a foundation of trusted, integrated capabilities in place, you can start orchestrating them to handle end-to-end processes. This is where you truly begin building multi-capability workflows. It’s generally a good idea to start with active human supervision, requiring approvals at key handoff points between different tools or automated steps. The goal is to build justified trust that the AI operates correctly within the collaborative workflow.

- Phase 4: Mature Toward a "Digital Workforce" (Year 2+). Over time, as the system proves its accuracy and reliability, you can gradually reduce the level of human oversight for routine tasks. The long-term vision is to cultivate a team of "digital workers" that collaborate intelligently to handle entire business functions. This requires an enterprise-wide strategy for governance, knowledge management, and defining how human and AI teams will work together most effectively.
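One way to implement the gradual reduction of oversight described in Phases 3 and 4 is a per-task policy that keeps human approval in place until a task type has a sufficient record of runs that reviewers approved without changes. The Python sketch below assumes that rule; the `OversightPolicy` class and its thresholds are hypothetical, and each organization would choose its own. Only the most recent runs count, so oversight switches back on automatically if quality slips.

```python
from dataclasses import dataclass, field


@dataclass
class OversightPolicy:
    # Hypothetical thresholds; each organization would set its own.
    window: int = 50                 # number of most recent reviewed runs considered
    min_approval_rate: float = 0.98  # share approved by a human without changes
    always_review: set[str] = field(default_factory=set)  # never unsupervised
    history: dict[str, list[bool]] = field(default_factory=dict)

    def record_review(self, task_type: str, approved_unchanged: bool) -> None:
        self.history.setdefault(task_type, []).append(approved_unchanged)

    def needs_human_approval(self, task_type: str) -> bool:
        if task_type in self.always_review:
            return True
        recent = self.history.get(task_type, [])[-self.window:]
        if len(recent) < self.window:
            return True  # not enough evidence yet
        return sum(recent) / len(recent) < self.min_approval_rate


policy = OversightPolicy(window=5, min_approval_rate=0.8, always_review={"claim_denial"})
for _ in range(5):
    policy.record_review("address_change", True)
print(policy.needs_human_approval("address_change"))  # False: 5 of 5 approved unchanged
policy.record_review("address_change", False)
policy.record_review("address_change", False)
print(policy.needs_human_approval("address_change"))  # True: 3 of last 5 approved
```

Listing high-stakes task types in `always_review` keeps a human in the driver's seat for those decisions no matter how good the track record looks.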


Looking Forward: Smart Teamwork Over Risky Autonomy

The future of AI in the enterprise isn’t a fleet of fully autonomous black boxes. It’s a hybrid workforce where AI-powered systems handle routine tasks, organize information, and provide fact-based recommendations, while humans provide critical judgment, strategic thinking, and ethical oversight. By starting with specific problems and choosing solutions that prioritize control and transparency, businesses can gradually evolve toward a future of powerful human-AI collaboration, unlocking immense productivity gains without giving up the driver's seat.